What is a Neural Network?

A neural network is a computational model loosely inspired by the way neurons in the brain are connected. It learns to recognize patterns in data and uses them to make predictions. At its most fundamental level, a neural network consists of layers of interconnected processing units called neurons or nodes.

The Core Components (The Blocks)

A typical neural network is organized into three main types of layers:

- Input Layer: This is the first layer of the network. Each neuron in this layer represents a specific feature of the input data. For example, in a model that predicts house prices, each neuron might represent a feature like the square footage, number of bedrooms, or location. The input layer simply passes this raw data to the next layer.

- Hidden Layers: These are the intermediate layers between the input and output layers, and they are where most of the network’s computation happens. Each neuron in a hidden layer takes the outputs of the previous layer as its inputs, computes a weighted sum of them, passes that sum through an activation function, and sends the result on to the next layer. A “deep” neural network is simply a network with multiple hidden layers.

- Output Layer: This is the final layer, which produces the network’s prediction. The number of neurons in this layer depends on the task:

  - For binary classification (e.g., spam or not spam), it typically has one neuron whose output is interpreted as the probability of the positive class.

  - For multi-class classification (e.g., classifying a picture as a cat, dog, or bird), it has one neuron for each class, and the class whose neuron produces the highest output is the prediction.

  - For regression (e.g., predicting a continuous value like house price), it typically has one neuron for each value being predicted, so a single neuron for a single price.
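To make these cases concrete, here is a minimal Python sketch of how the raw numbers coming out of the output layer are often turned into a prediction. It assumes two common conventions that the text above does not prescribe: a sigmoid on the single binary-classification neuron, and a softmax across the multi-class neurons so their outputs sum to 1. The scores are made up for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash a raw score into (0, 1); read as P(positive class)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores: list[float]) -> list[float]:
    """Turn one raw score per class into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Binary classification: a single output neuron.
spam_score = 2.0  # hypothetical raw output of that neuron
p_spam = sigmoid(spam_score)
print(f"P(spam) = {p_spam:.3f}")  # prints P(spam) = 0.881

# Multi-class classification: one output neuron per class.
classes = ["cat", "dog", "bird"]
raw_scores = [2.0, 1.0, 0.1]  # hypothetical raw outputs, one per class
probs = softmax(raw_scores)
print(classes[probs.index(max(probs))])  # prints cat
```

For regression, the single output neuron’s raw value is typically used directly as the predicted number, with no squashing function applied.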

How a Neural Network Works

A neural network makes a prediction through a process called forward propagation (Steps 1 to 3) and learns from its mistakes through backpropagation (Step 4):

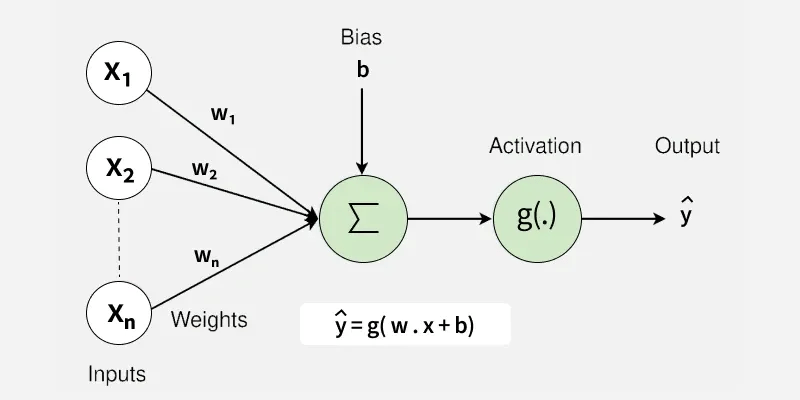

Step 1: Weighted Input & Summation

Every connection between neurons has a numerical value called a weight. When a neuron receives inputs from the previous layer, it multiplies each input by the weight of the corresponding connection. The weights determine how strongly each input influences the neuron, which is why they are what the network adjusts when it learns. The neuron then sums these weighted inputs and usually adds a bias, a learned constant that shifts the total up or down.
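The weighted sum for a single neuron can be sketched in a few lines of Python. The input values and weights below are hypothetical, and the function also adds a bias term (a learned constant that most neurons include); passing `bias=0.0` gives the plain weighted sum.

```python
def weighted_sum(inputs: list[float], weights: list[float], bias: float = 0.0) -> float:
    """Multiply each input by its weight, add the products, then add the bias."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Hypothetical neuron with three inputs (e.g. three scaled house features).
inputs = [0.5, 3.0, 1.0]
weights = [0.8, -0.2, 0.4]
z = weighted_sum(inputs, weights, bias=0.1)
print(round(z, 4))  # 0.4 - 0.6 + 0.4 + 0.1 = 0.3
```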

Step 2: The Activation Function

After summing the weighted inputs, the neuron passes the result through an activation function. This function introduces non-linearity into the network, allowing it to learn complex patterns. Without activation functions, a neural network could only learn linear relationships no matter how many layers it had: a linear function of a linear function is still linear, so the whole stack of layers would collapse into a single linear transformation. Common activation functions include ReLU, sigmoid, and tanh.
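Here is a minimal Python sketch of the three activation functions named above, plus a small check of why non-linearity matters: two stacked layers with no activation function reduce to a single linear layer. The weights in the check are arbitrary, hypothetical values, and each layer is simplified to a single neuron.

```python
import math


def relu(z: float) -> float:
    """ReLU: keep positive values, replace negative values with 0."""
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Sigmoid: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z: float) -> float:
    """Tanh: squash any real number into the range (-1, 1)."""
    return math.tanh(z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}")

# Without an activation function, two stacked one-neuron "layers"
#   y = w2 * (w1 * x + b1) + b2
# equal a single layer with weight w2 * w1 and bias w2 * b1 + b2.
w1, b1, w2, b2 = 3.0, 1.0, -2.0, 0.5  # hypothetical weights and biases
for x in (-1.0, 0.0, 2.0):
    stacked = w2 * (w1 * x + b1) + b2
    collapsed = (w2 * w1) * x + (w2 * b1 + b2)
    assert math.isclose(stacked, collapsed)
```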

Step 3: Forward Propagation

This process of weighted summation and activation is repeated for every neuron in each hidden layer, layer by layer, with the output of one layer becoming the input for the next. The output layer performs the same computation (sometimes with a different activation function, or none), and its output is the network’s prediction.
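Forward propagation through a whole network is the same weighted-sum-plus-activation step applied layer by layer. The sketch below assumes a tiny, hypothetical network (2 inputs, 3 hidden neurons with ReLU, and 1 output neuron with no activation, as for regression) whose weights are hand-picked rather than learned.

```python
def relu(z: float) -> float:
    return max(0.0, z)


def identity(z: float) -> float:
    """No activation: the output neuron's weighted sum is used as-is."""
    return z


def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer; each row of `weights` belongs to one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Feed x through each layer in turn; each layer's output is the next layer's input."""
    activations = x
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical 2-input -> 3-hidden -> 1-output network: (weights, biases, activation) per layer.
layers = [
    ([[0.5, -1.0], [1.0, 1.0], [-0.5, 2.0]], [0.0, 0.0, 0.0], relu),
    ([[1.0, 0.5, -1.0]], [0.2], identity),
]
prediction = forward([1.0, 2.0], layers)
print(prediction)  # hidden layer gives [0.0, 3.0, 3.5]; output is approximately [-1.8]
```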

Step 4: Backpropagation (The Learning Process)

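Because the weights usually start out as small random numbers, the network’s initial predictions are essentially random. The model learns by adjusting its weights to improve accuracy, using a process called backpropagation to determine how each weight should change.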

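- The network compares its prediction to the correct answer and calculates the error, or “loss,” using a loss function.

- This error is then propagated backward through the network, from the output layer back to the input layer.

- The backpropagation algorithm applies the chain rule of calculus to this error to calculate how much each weight contributed to the incorrect prediction (the gradient of the loss with respect to that weight).

- Each weight is then adjusted by a small step in the direction that reduces the error, an update rule called gradient descent. The size of the step is controlled by a setting called the learning rate.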
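These four steps can be sketched for the simplest possible case: a single neuron with a sigmoid activation and a squared-error loss, on one hypothetical training example. In a real network the same chain-rule calculation is repeated layer by layer from the output back toward the input; the weights, input, and learning rate here are illustrative assumptions.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Forward pass of one neuron: weighted sum plus bias, then sigmoid."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss_fn(w, b, x, target):
    """Squared-error loss on one example."""
    return 0.5 * (predict(w, b, x) - target) ** 2


def gradients(w, b, x, target):
    """Backpropagation for one neuron: chain rule from the loss back to each weight."""
    pred = predict(w, b, x)
    dloss_dpred = pred - target        # derivative of 0.5 * (pred - target) ** 2
    dpred_dz = pred * (1.0 - pred)     # derivative of the sigmoid
    delta = dloss_dpred * dpred_dz     # error signal at this neuron
    grad_w = [delta * xi for xi in x]  # dz/dw_i = x_i, so dLoss/dw_i = delta * x_i
    grad_b = delta                     # dz/db = 1
    return grad_w, grad_b


# Hypothetical example with two inputs; the correct answer is 1.0.
w, b = [0.5, -0.3], 0.1
x, target = [1.0, 2.0], 1.0
learning_rate = 0.5  # hypothetical step size

before = loss_fn(w, b, x, target)
grad_w, grad_b = gradients(w, b, x, target)
w = [wi - learning_rate * gi for wi, gi in zip(w, grad_w)]  # gradient descent step
b = b - learning_rate * grad_b
after = loss_fn(w, b, x, target)
print(f"loss before: {before:.4f}, after one update: {after:.4f}")
```

A common sanity check for hand-written backpropagation is to compare each gradient with a finite-difference estimate, (loss(w + ε) − loss(w − ε)) / 2ε, for a small ε.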

Repeating this cycle many times over the training data (forward propagation to make a prediction, then backpropagation to correct the error) is how a neural network learns from experience.
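The full loop of repeated forward and backward passes can be seen on the smallest possible network: one neuron with no activation function (a linear output, as for regression), fitted to hypothetical points on the line y = 2x + 1. Each epoch runs forward propagation on every example, accumulates the gradients, and takes one gradient-descent step; the learning rate and number of epochs are illustrative choices.

```python
# Hypothetical training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0        # start with an uninformed guess
learning_rate = 0.1    # hypothetical step size
losses = []

for _ in range(2000):  # each pass over the data is one epoch
    grad_w = grad_b = 0.0
    total_loss = 0.0
    for x, y in zip(xs, ys):
        pred = w * x + b                  # forward propagation
        error = pred - y
        total_loss += 0.5 * error ** 2    # squared-error loss
        grad_w += error * x               # backpropagation: dLoss/dw
        grad_b += error                   # backpropagation: dLoss/db
    n = len(xs)
    w -= learning_rate * grad_w / n       # gradient descent step on the average loss
    b -= learning_rate * grad_b / n
    losses.append(total_loss / n)

print(f"learned w = {w:.3f}, b = {b:.3f}")  # approaches w = 2, b = 1
print(f"loss: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.6f}")
```

Because this toy problem is linear, one neuron is enough; the same loop structure applies to larger networks, where backpropagation supplies the gradients for every layer.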

In summary, a neural network is a system of interconnected layers of neurons that learns by adjusting the weights of its connections based on the difference between its predictions and the correct answers.