Readily Available (Off-the-Shelf) Tools

These are pre-built, general-purpose tools that are ready to use with little to no setup. They are designed to serve a wide audience and handle common tasks across various domains.

Key Characteristics:

- Fast Deployment: You can start using them immediately, often through a web interface or a simple API.

- Low Initial Cost: They typically operate on a subscription or pay-as-you-go model, with no large upfront development expenses.

- Generalist Capabilities: They are trained on vast, public datasets, making them versatile but not specialized.

- Limited Customization: You can adjust the prompt or a few settings, but you can’t change the underlying model or its core functionality.

Examples:

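- ChatGPT or Gemini: These are widely used for general text tasks like answering questions, writing emails, and brainstorming ideas. The chat products do not let you fine-tune the underlying model on your company’s private documents; where a provider offers fine-tuning, it is through its developer API or platform rather than the chat interface.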

- DALL-E or Midjourney: These are public-facing image generators that create art and images based on text descriptions. They are not trained on a specific brand’s assets or style guide.

- GitHub Copilot: A coding assistant that provides general code suggestions based on its broad training data, but it isn’t specifically trained on a company’s internal code base or coding standards.

Custom-Built (Need-Based) GenAI Tools

These are AI solutions specifically developed and fine-tuned for a particular organization’s unique needs, data, and workflows.

Key Characteristics:

- Longer Development Time: Building a custom solution requires a significant investment in time for data collection, training, and fine-tuning.

- Higher Upfront Cost: Requires a dedicated team or a development partner, with costs for infrastructure, training, and maintenance.

- Specialist Capabilities: The model is trained on proprietary data (e.g., internal documents, customer interactions, or specific product images), making it highly accurate for a specific niche.

- High Customization: You have full control over the model’s behavior, features, and integration with existing systems.

Examples:

- Personalized E-commerce AI: A retail company could build a generative AI tool trained on its specific product catalog, customer reviews, and brand voice to automatically write unique, on-brand product descriptions and marketing copy.

- Internal Knowledge Base Chatbot: A company could fine-tune an LLM on its private internal documents, like employee handbooks and technical manuals, to create a chatbot that provides accurate and secure answers to employee questions.

- Fraud Detection for a Bank: A financial institution might train a generative AI model on its transaction data to create synthetic fraudulent transaction patterns. This helps them test and improve their fraud detection systems in a secure environment.

Ultimately, off-the-shelf tools are great for general use and getting started quickly, while custom-built applications provide a precise, powerful, and scalable solution for solving specific business problems and gaining a competitive advantage.

Building Custom AI Applications

Building a custom AI application isn’t a one-size-fits-all process. There are several distinct approaches, ranging from simple and cost-effective to complex and resource-intensive. The best option depends on your specific needs, budget, and the level of customization required.

Here are the primary options for building custom generative AI tools:

1. Prompt Engineering

This is the simplest and fastest way to customize an off-the-shelf tool. It doesn’t involve any changes to the model itself. Instead, you craft very specific, detailed, and well-structured prompts to guide a general-purpose model’s output.

- How it works: You use a pre-trained model like Gemini or ChatGPT and provide clear instructions, context, and examples directly in the prompt to get a desired response.

- Best for: Quick experiments, one-off tasks, and applications where the domain knowledge is limited or can be included in the prompt.

- Pros: No coding or AI expertise required; low cost; immediate results.

- Cons: Limited by the model’s knowledge cutoff; prone to “hallucinations” (generating plausible but incorrect information); outputs can be inconsistent.
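The "instructions, context, and examples" structure described above can be sketched as a small prompt builder. This is only an illustration: the section labels (`Instructions:`, `Context:`, `Example N:`), the `Example` class, and the sample store policy are all hypothetical choices, not a format any particular model requires.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked example shown to the model (few-shot prompting)."""
    user_input: str
    ideal_output: str


def build_prompt(instructions: str, context: str, examples: list[Example], query: str) -> str:
    """Assemble instructions, context, examples, and the query into one prompt string."""
    parts = [f"Instructions:\n{instructions.strip()}"]
    if context.strip():
        parts.append(f"Context:\n{context.strip()}")
    for i, ex in enumerate(examples, start=1):
        parts.append(f"Example {i}:\nInput: {ex.user_input}\nOutput: {ex.ideal_output}")
    # End with the real query and an open "Output:" so the model completes it.
    parts.append(f"Input: {query.strip()}\nOutput:")
    return "\n\n".join(parts)


# Hypothetical task and data, for illustration only.
prompt = build_prompt(
    instructions="Rewrite the input as a polite, one-sentence customer reply.",
    context="Our store ships within 2 business days.",
    examples=[
        Example(
            "where is my order",
            "Thank you for reaching out; your order ships within 2 business days.",
        )
    ],
    query="can i return shoes",
)
print(prompt)
```

The resulting string is what you would paste into a chat window or send through an API. The model itself is untouched; only the input changes.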

2. Retrieval-Augmented Generation (RAG)

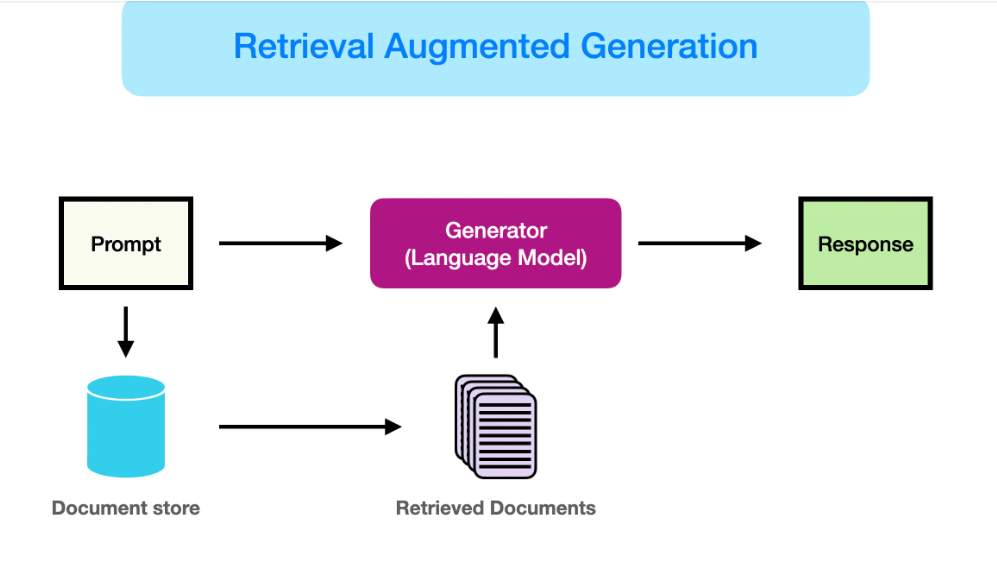

This is a popular and highly effective method for grounding a general-purpose model in your specific, private data. It combines an LLM with a retrieval system to ensure the generated output is based on authoritative, up-to-date information.

- How it works: When a user submits a query, the system first retrieves relevant information from your private documents, databases, or websites. This retrieved information is then added to the prompt, and the LLM uses both the prompt and the retrieved data to generate a response.

- Best for: Building custom chatbots for a company’s internal knowledge base, creating a customer support tool based on product manuals, or providing up-to-date information for which the base model’s training data is outdated.

- Pros: Highly accurate outputs grounded in your data; cost-effective (no need to retrain a huge model); easy to update the knowledge base without retraining.

- Cons: Requires setting up and maintaining a retrieval system (e.g., a vector database); can still suffer from poor outputs if the retrieval is not accurate.
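The retrieve-then-generate flow described above can be sketched in a few lines. The sketch makes several simplifying assumptions: retrieval uses bag-of-words cosine similarity as a stand-in for the embedding search a vector database would provide, the three documents are invented, and `call_llm` is a hypothetical placeholder rather than a real API.

```python
import math
import re
from collections import Counter

# Hypothetical private documents, for illustration only.
DOCUMENTS = [
    "Employees accrue 1.5 vacation days per month of service.",
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client must be updated before connecting from home.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Step 1: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query: str, passages: list[str]) -> str:
    """Step 2: add the retrieved passages to the prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def call_llm(prompt: str) -> str:
    """Step 3 placeholder: a real system would send the prompt to an LLM API here."""
    return f"[model response to a {len(prompt)}-character prompt]"


query_text = "How many vacation days do employees accrue?"
passages = retrieve(query_text, DOCUMENTS, k=2)
rag_prompt = build_augmented_prompt(query_text, passages)
answer = call_llm(rag_prompt)
print(rag_prompt)
```

Updating the knowledge base here means editing `DOCUMENTS`; nothing about the model changes, which is why RAG avoids retraining.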

3. Fine-Tuning

This approach involves taking a pre-trained foundation model and continuing its training on a smaller, domain-specific dataset. This adjusts the model’s parameters, teaching it to align with a specific style, tone, or task.

- How it works: You start with an existing model (e.g., a smaller open-source model) and provide it with a curated dataset of examples (e.g., thousands of question-and-answer pairs or a specific style of writing). The model’s weights are slightly adjusted to reflect these new patterns.

- Best for: Making a model sound like your brand, ensuring it follows specific instructions for a creative task, or improving its performance on a specialized technical domain.

- Pros: Can achieve very high performance for a specific task; makes the model’s behavior more consistent; a small fine-tuned model can often match a much larger general-purpose model on that narrow task while being cheaper and faster to run.

- Cons: Requires a high-quality dataset and significant computational resources; can be complex to set up; typically more expensive than RAG.
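To make "continuing training from pre-trained weights" concrete, here is a deliberately tiny sketch. It assumes a one-feature linear model as a stand-in for a neural network and uses invented numbers for both the "pre-trained" weights and the domain dataset. Real fine-tuning uses a deep learning framework and far more parameters, but the core idea is the same: start from existing weights and take a few small gradient steps on new data.

```python
def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the model y = w * x + b on the dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w: float, b: float, data: list[tuple[float, float]],
              lr: float = 0.05, steps: int = 20) -> tuple[float, float]:
    """Continue training from the given weights with plain gradient descent."""
    n = len(data)
    for _ in range(steps):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pre-trained" weights: learned earlier on general data (hypothetical values).
pretrained_w, pretrained_b = 2.0, 0.0

# Small domain-specific dataset that follows a slightly different pattern.
domain_data = [(0.0, 0.5), (1.0, 2.7), (2.0, 4.9), (3.0, 7.1)]

loss_before = mse(pretrained_w, pretrained_b, domain_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
loss_after = mse(tuned_w, tuned_b, domain_data)

print(f"before: w={pretrained_w:.3f} b={pretrained_b:.3f} loss={loss_before:.4f}")
print(f"after:  w={tuned_w:.3f} b={tuned_b:.3f} loss={loss_after:.4f}")
```

The number of parameters is the same before and after; only their values move, and the error on the domain data drops.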

4. Training from Scratch

This is the most extreme and resource-intensive option, involving building a foundation model from the ground up. This is typically reserved for large tech companies or research institutions.

- How it works: You start with a blank slate and train a model on a massive, proprietary dataset that you have collected and curated. This gives you complete control over the model’s architecture, training process, and final capabilities.

- Best for: Creating a model with unique capabilities or a truly proprietary understanding of a domain where no existing foundation model is sufficient.

- Pros: Complete control and ownership; potential for creating a market-leading, highly differentiated product.

- Cons: Extremely expensive and time-consuming (can cost millions of dollars and take months of GPU time); requires a massive team of highly specialized experts.

Chat interface to an LLM vs RAG

A RAG system is a custom application with a specific architecture, whereas a “normal chat interface” is a pre-built, off-the-shelf tool. Here’s the key difference:

The Chat Interface: A tool like Gemini Chat responds based on what the model learned during pre-training. It’s a generalist that can handle a huge variety of queries, but out of the box it is not connected to your private, proprietary, or real-time data sources (like your company’s internal documents or a live database).

The RAG Application: This is a custom solution you build that sits between the user and the LLM. When a user enters a query, the RAG system first performs a retrieval step on your specific data. It then takes the most relevant information it found and injects it into a prompt, which it sends to the LLM. The LLM then generates a response based on this augmented prompt.

So, while you can manually copy and paste text from your own documents into a chat prompt (in effect, a manual form of retrieval augmentation), a true RAG system automates the entire retrieval process. Building one means calling the LLM through its API and connecting it to a retrieval system (such as a vector database).

RAG vs Uploading a file into an LLM’s chat interface

Retrieval-Augmented Generation (RAG) and simply uploading a file into an LLM’s chat interface represent distinct methods for providing external information to a Large Language Model (LLM).

Uploading a File in Chat Interface:

- This method directly injects the content of the uploaded file into the LLM’s context window for a specific interaction.

- The LLM processes the entire uploaded document (or only part of it, if the document exceeds the token limit) as part of the immediate conversation.

- It is a temporary and session-specific approach, providing the LLM with information relevant only for the current query or a series of related queries within that session.

- The LLM’s ability to utilize this information is constrained by its context window size, meaning very large documents might be truncated or require manual summarization.
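The context-window constraint can be illustrated with a rough sketch. It assumes whitespace-separated words as a crude approximation of tokens (real tokenizers count differently) and an invented budget of 300 tokens; `truncate_document` is a hypothetical helper, not how any particular chat product handles uploads.

```python
def truncate_document(document: str, max_doc_tokens: int) -> tuple[str, int]:
    """Keep only the first max_doc_tokens words; return (kept_text, dropped_count)."""
    if max_doc_tokens < 0:
        raise ValueError("max_doc_tokens must be non-negative")
    tokens = document.split()  # crude stand-in for a real tokenizer
    kept = tokens[:max_doc_tokens]
    return " ".join(kept), len(tokens) - len(kept)


# A synthetic 1,000-word "uploaded file".
document = " ".join(f"word{i}" for i in range(1000))

kept_text, dropped = truncate_document(document, max_doc_tokens=300)
print(f"kept {len(kept_text.split())} tokens, dropped {dropped}")
```

Anything past the cutoff is invisible to the model for that conversation, so a question about the end of a long file may go unanswered.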

Retrieval-Augmented Generation (RAG):

- RAG is a system architecture that integrates an information retrieval component with an LLM.

- Instead of directly feeding the entire document, RAG involves indexing and storing external knowledge (from various sources, including uploaded files) in a searchable format, often a vector database.

- When a query is received, the RAG system first retrieves relevant chunks or passages from this external knowledge base based on the query’s semantic similarity.

- These retrieved snippets are then provided to the LLM as context alongside the user’s query, allowing the LLM to generate a response informed by specific, targeted information.

- This approach offers scalability and efficiency for large datasets, as the LLM only processes the most relevant information, rather than the entire document. It also helps to reduce hallucinations and ensure responses are grounded in factual data.
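The indexing and chunk-retrieval steps can be sketched as follows. This is a simplified illustration: chunks are fixed-size word windows with overlap, relevance is scored by shared words rather than by the semantic (embedding) similarity a vector database would provide, and the document, product name, and sizes are all invented.

```python
import re


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in the range [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def overlap_score(query: str, chunk: str) -> int:
    """Count distinct words shared by the query and the chunk."""
    q = set(re.findall(r"[a-z0-9]+", query.lower()))
    c = set(re.findall(r"[a-z0-9]+", chunk.lower()))
    return len(q & c)


def top_k_chunks(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k highest-scoring chunks for the query."""
    return sorted(chunks, key=lambda c: overlap_score(query, c), reverse=True)[:k]


# Synthetic document: one relevant sentence buried in 400 words of filler.
document = (
    "filler " * 200
    + "The warranty period for the X100 router is 24 months. "
    + "padding " * 200
)

chunks = chunk_words(document, size=50, overlap=10)
best = top_k_chunks("What is the warranty period for the router?", chunks, k=2)

total_words = len(document.split())
sent_words = sum(len(c.split()) for c in best)
print(f"document: {total_words} words, sent to the LLM: {sent_words} words")
```

Only the selected chunks would be placed in the prompt, so the amount of text the LLM reads stays roughly constant no matter how large the indexed collection grows.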